July 16th, 2025

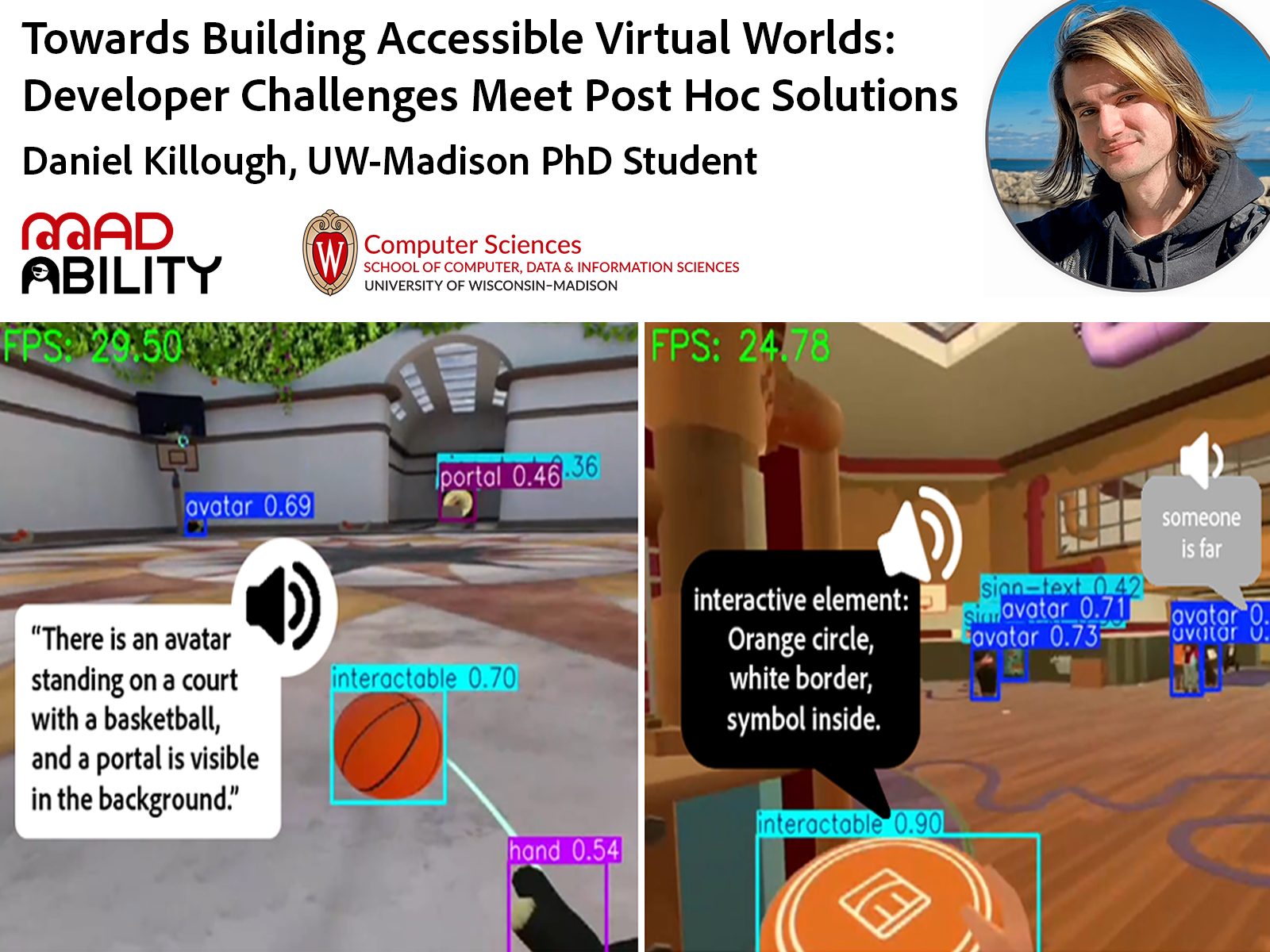

Join us as Daniel Killough of UW-Madison shares how his team has identified developer challenges in accessible XR and created a new tool, VRSight, that utilizes object detection, depth estimation, and multimodal LLMs to empower blind and low vision users.

Event Details

Date: Wednesday, July 16th, 2025

Time: 10 am US Pacific / 1 pm US Eastern

Duration: 60 minutes

Location: Zoom

Extended reality (XR) applications remain inaccessible to people with disabilities due to development barriers. We present two papers addressing this challenge. The first, XR for All, analyzes developer challenges across visual, cognitive, motor, speech, and hearing impairments, revealing obstacles that prevent accessible XR implementation.

Recognizing this gap, we developed VRSight, which leverages object detection, depth estimation, and multimodal large language models to identify virtual objects for blind and low vision users without developer intervention. VRSight’s object detection is powered by our DISCOVR dataset of 17,691 social VR images.

We conclude by discussing future work, including interaction design for VRSight and drag-and-drop plugins for streamlined accessibility development.

If you require accommodations such as a sign language interpreter, please let us know ahead of time by emailing info@xraccess.org.

About the Speaker

Daniel Killough

Daniel is a third-year PhD student at UW-Madison in Dr. Yuhang Zhao’s MadAbility Lab. He combines HCI, XR, and AI to create accessible systems for people with disabilities, particularly blind and low vision users. He has presented at top conferences including CHI and ASSETS and is seeking industry accessibility work after completing his PhD.