The Seventh Annual XR Access Symposium

June 26-27, 2025, New York City

XR Access 2025:

3D Diversity

Read the 2025 Symposium Report to get all the details from the Symposium! With attendance statistics, plenary summaries, and amazing photography from the Symposium, it’s got everything you need to catch up on what you missed from this year’s event.

Video

Day 1 – June 26

Day 2 – June 27

Important Dates

All deadlines are at 11:59 PM US Eastern Time on the listed day.

Presentations

- Applications close: Friday February 28th

- Notification of chosen presenters: Rolling selections beginning Monday March 31st

- Finalize materials, including presentations, posters, and videos: Friday June 13th

Scholarships

- Applications close: Friday March 14th

- Scholarship decisions released: Monday April 14th

- Scholarship Round 2 applications close: Friday, May 30th

- Scholarship Round 2 decisions released: Friday, June 6th

- Reimbursement requests due (with receipts): Monday July 28th. Send questions to info@xraccess.org

- Reimbursements paid: No later than Friday August 29th

Volunteers

- Applications close: Wednesday June 18th

- Volunteer training: Wednesday June 25th

Registration

- Early bird discount ends: Friday April 25th

- Final registration deadline: Friday June 20th

Schedule

June 26th, 2025

| Start Time (EDT) | Type | Title | People |

|---|---|---|---|

| 9:00 AM | Plenary | Welcome & Opening Remarks | Shiri Azenkot, Dylan Fox; XR Access |

| 9:20 AM | Plenary | Understanding How Blind and Low Vision Users Relate to and Use An AI-Powered Guide to Enhance VR Accessibility | Jazmin Collins, Cornell |

| 9:40 AM | Plenary | Beyond Access: Meaningful Accessibility in Video Games | Brian A. Smith, Columbia University |

| 10:00 AM | Plenary | Virtual Reality Interventions for Intersectional Stress Reduction Among Black Women | Judite Blanc, University of Miami Miller School of Medicine |

| 10:20 AM | Break | Snack Break | |

| 10:40 AM | Plenary | Designing Disability-Inclusive Avatars: Representing Chronic Pain Through Social VR Avatar Movement and Appearance | Ria Gualano, Cornell |

| 11:00 AM | Plenary | Cripping Up – How we are Using VR to Challenge Ableism | Meg Fozzard, Amy Crighton; independent |

| 11:20 AM | Plenary | Lemmings: Tools For Accessible Gestures | Justin Berry, Yale |

| 11:40 AM | Plenary | Augmented Reality Navigation System for Wheelchair Users | Anis Idrizović, Huiran Yu and Md Mamunur Rashid; University of Rochester |

| 12:00 PM | Break | Lunch | |

| 1:00 PM | Exhibit | Exhibits | |

| 2:40 PM | Breakouts | Breakout Discussions | |

| 3:40 PM | Break | Snack Break | |

| 4:00 PM | Plenary | Breakout Summaries | Breakout leaders |

| 4:20 PM | Plenary | XR for Autistic Inclusion: Balancing Innovation with Ethics | Nigel Newbutt, University of Florida |

| 4:40 PM | Plenary | Day 1 Closing | Shiri Azenkot, Dylan Fox; XR Access |

June 27th, 2025

| Start Time (EDT) | Type | Title | People |

|---|---|---|---|

| 9:00 AM | Plenary | Day 2 Opening | Dylan Fox, XR Access |

| 9:10 AM | Plenary | AI Advancement in Wearable and MR Technology | Agustya Mehta, Matthew Bambach; Meta Reality Labs |

| 9:30 AM | Plenary | Aesthetic Access for VR: Centering Disabled Artistry | Kiira Benz, Alice Sheppard; Double Eye Studios & Kinetic Light |

| 9:50 AM | Plenary | Accessibility Innovations for Extended Reality Drama and Documentary | Sacha Wares, Trial and Error Studio |

| 10:10 AM | Exhibits | Snack Break, Exhibits | |

| 11:40 AM | Plenary | I4AD: Diverse Perspectives from BLV Users on Designing Immersive, Interactive, Intelligent, and Individualized Audio Description Framework for VR Musical Performances | Khang Dang, New Jersey Institute of Technology |

| 12:00 PM | Plenary | Exploring Cognitive Accessibility in Mixed Reality for People with Dementia | Rupsha Mutsuddi, York University |

| 12:20 PM | Plenary | Immersive Environments for Resettlement | Milad Mozari, University of Utah; Krysti Nellermoe, International Rescue Committee |

| 12:40 PM | Plenary | Day 2 Closing | Shiri Azenkot, Dylan Fox; XR Access |

We encourage participants to join us after the end of the Symposium at 1pm on the 27th for an informal lunch at Anything at All restaurant next to the Graduate hotel, or to grab a bite at The Cafe at Cornell Tech and discuss your favorite talks.

Venue

Verizon Executive Education Center at Cornell Tech

2 West Loop Road

New York, NY 10044

The Verizon Executive Education Center at Cornell Tech redefines the landscape of executive event and conference space in New York City. This airy, modern, full-service venue offers convenience and ample space—with breathtaking views—for conferences, executive programs, receptions, seminars, meet-ups and more. Designed by international architecture firm Snøhetta, the Verizon Executive Education Center blends high design and human-centered technology to bring you an advanced meeting space suited for visionary thinkers.

Plenary Sessions

Understanding How Blind and Low Vision Users Relate to and Use An AI-Powered Guide to Enhance VR Accessibility

Jazmin Collins

Cornell University | PhD Candidate

Jazmin Collins is an Information Science PhD student, specializing in XR and game accessibility using AI for people with disabilities. She is advised by Dr. Shiri Azenkot in the Enhancing Ability Lab and Dr. Andrea Stevenson Won in the Virtual Embodiment Lab at Cornell University.

As social virtual reality (VR) grows more popular, it becomes increasingly important to address VR accessibility barriers for blind and low vision (BLV) users. Prior work has implemented VR guides, allowing human assistants to support BLV users in navigation and visual interpretation. However, human help may not always be available or desirable for BLV users. In this work, we design an AI-powered guide that can provide typical guidance support, modify VR environments, and change its form and personality to suit user needs. We studied the use of our guide with 16 BLV participants and report on participants’ varying methods of integrating the guide into their VR experiences. We also note interesting patterns in participants’ treatment of the guide, such as placing it in negative social roles like a scapegoat and characterizing its errors with its virtual form. This work offers another step into exploring the design space of accessible guides in VR.

Beyond Access: Meaningful Accessibility in Video Games

Brian Smith

Columbia University | Assistant Professor of Computer Science

Brian A. Smith is a computer science professor at Columbia University. He develops computers that center and elevate the human experience. His expertise spans computer science, psychology, and game design, with work in accessibility, social computing, augmented reality, and smart cities. He has received multiple honors for research and teaching.

Accessibility is not just about functionality—it is about ensuring people with disabilities can fully partake in experiences that matter. In this talk, we will explore the concept of meaningful accessibility through the lens of game design, highlighting efforts from Columbia’s Computer-Enabled Abilities Laboratory (CEAL) to make video games accessible to blind and low-vision players. We will take a behind-the-scenes look at recent innovations such as Racing Auditory Display (RAD) and Surveyor that have earned major industry recognition. This case study offers a blueprint for achieving meaningful accessibility in many fields.

VR Interventions for Intersectional Stress Reduction Among Black Women

Dr. Judite Blanc

University of Miami Miller School of Medicine | Assistant Professor of Psychiatry and Behavioral Sciences

Dr. Judite Blanc is a multilingual Assistant Professor of Psychiatry and Behavioral Sciences at the University of Miami Miller School of Medicine, where she also serves as Head of Community Outreach at the Center for Translational Sleep and Circadian Sciences. She is the Founding Director of the Holistic Families Lab (HFL), an interdisciplinary research lab dedicated to advancing sleep health equity and stress science among historically marginalized populations.

Black women frequently face intersectional stressors (economic strain, caregiving burdens, racial/gender discrimination) linked to mental health, hypertension, and sleep issues. Digital mental health apps often inadequately address their unique needs. This research involved focus groups with 12 perinatal and 16 hypertensive Black women enrolled in two clinical trials assessing VR interventions: NurtureVR™ (mindfulness, relaxation, postpartum care) and First Resort (stress reduction, blood pressure management). Findings revealed themes on motherhood complexities, household/financial stress, social support, and notably, VR therapy’s escapism benefits. Preliminary results suggest culturally tailored VR-based interventions effectively overcome access barriers in low-income communities.

Designing Disability-Inclusive Avatars: Representing Chronic Pain Through Social VR Avatar Movement and Appearance

Ria Gualano

Cornell University | PhD Candidate

Ria Gualano is a PhD Candidate at Cornell University’s Department of Communication. She is a recipient of the 2025 Mellon/ACLS Dissertation Innovation Fellowship. Her research focuses on the communication of disability through disability arts and immersive technologies, and she has led two projects on disability representation through VR avatars.

Emerging from Cornell’s Enhancing Ability and Virtual Embodiment Labs, this paper focuses on representations of chronic pain through VR avatars. With recent movements toward disability as a social identity, we explore whether pain associated with chronic pain conditions (e.g., arthritis, Crohn’s disease, lupus) is also linked to identity and representation preferences. In prior work, VR users noted preliminary interest in how avatar movements related to their disabilities. This study is the first to focus on movement-based disability representation in VR. We interviewed 19 participants and found pain communication preferences result from perceptions of stigma, (in)visibility, identity and context. Participants were interested in translating self-accommodations from the physical world into avatar movement. Some used metaphorical movements to communicate pain and perceived avatars as an opportunity to transcend time and reality. We discuss implications for inclusive avatar design.

Cripping Up – How we are Using VR to Challenge Ableism

Meg Fozzard

Freelance | Producer

Amy Crighton

Cripping Up | Director

This talk will explore the evolution and motivation of “Cripping Up,” an interactive VR experience that invites the audience to embody wheelchair user Meg Fozzard making what would be a simple journey for someone able-bodied, but for Meg feels like an odyssey. It challenges common narratives surrounding VR empathy by reminding the user that they, unlike Meg, are able to walk away. The term “cripping up,” often associated with non-disabled actors portraying disabled characters, takes on new meaning, highlighting the limitations of understanding that persist even in the apparently empathetic world of VR.

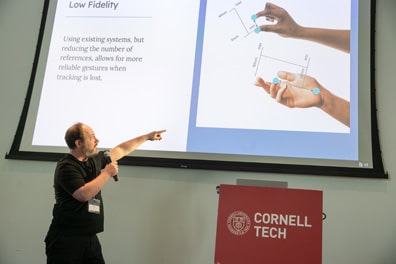

Lemmings: Tools For Accessible Gestures

Justin Berry

Yale Center For Immersive Technologies in Pediatrics | Project Director

Justin Berry is an artist, educator, creative producer, researcher, and game designer whose interdisciplinary work has been presented internationally in magazines, conferences, and museums. His current role is Creative Producer and Project Director for the Yale Center For Immersive Technologies in Pediatrics.

The tools that make development easy determine what gets developed. Lemmings is a tool for creating more accessible gesture and motion powered interactions, using Unity, that can leverage existing tracking solutions without being tethered to a particular version. Traditional gesture tracking relies on semantic configurations of the entire hand (such as in sign language). It can be difficult for some individuals to form such configurations with their hands. We bypass this limitation by breaking down these complex forms into a small number of tracked features that can function with much lower fidelity. It is the relationship between these features that matters, not specific configurations, which means that users can remap them in ways that are accessible and comfortable. A pinch can become a turn of the head; a stretch of the arm can be replaced by the opening of one’s palm. We are building Lemmings and want to make it open source, so that everyone can use it.

Augmented Reality Navigation System for Wheelchair Users

Anis Idrizović

University of Rochester | PhD Student

Md Mamunur Rashid

University of Rochester | PhD Student

Huiran Yu

University of Rochester | PhD Student

There are currently no commercial or otherwise generally available software tools for accessible navigation, posing a significant challenge to wheelchair users. We introduce an Augmented Reality (AR) Navigation App to assist wheelchair users in navigating the University of Rochester campus. Key accessibility data was collected in collaboration with LBS tech – a Korean company focused on the use of location-based services for enabling accessible navigation. The collected data include information about and photographs of different routes, building entrances, and staircases to ensure appropriate navigation directions. We worked with an accessibility advocate to ensure the app provides an optimal user experience. The app features wheelchair-accessible navigation, as demonstrated in testing by a wheelchair user. Additional features include estimated travel time to destination, AR-enhanced visualization of building entrances and navigation paths, and voice feedback for hands-free operation.

XR for Autistic Inclusion: Balancing Innovation with Ethics

Nigel Newbutt

University of Florida | Assistant Professor

Dr. Newbutt is B.O. Smith Research Professor and Director of the Equitable Learning Technology Lab at the University of Florida. His research explores technologies for autistic and neurodivergent populations, focusing on virtual reality headsets to support daily life. He emphasizes user input to shape inclusive, practical VR applications.

As XR technologies evolve, ensuring their accessibility and ethical application for diverse users, including neurodiverse individuals, is crucial. This talk will examine how immersive experiences can be designed ethically and inclusively for autistic users, balancing innovation with considerations like privacy, sensory needs, and informed consent. Extended Reality (XR) offers new possibilities for supporting autistic individuals (and many others with neurodiversity), yet its use raises critical ethical considerations for researchers and developers. This talk will explore key concerns in XR research and application with autistic populations, including privacy, security, content regulation, psychological well-being, informed consent, realism, sensory overload, and accessibility. While XR presents opportunities for education, employment, and social engagement, it’s crucial that we ensure responsible development and balance innovation with ethical responsibility.

AI Advancement in Wearable and MR Technology

Agustya Mehta

Meta Reality Labs | Director, Hardware

Matthew Bambach

Meta Reality Labs | AX Design Lead, Wearables

Prince Gupta

Meta Reality Labs | Group Product Manager, Research

Join Meta design and engineering leads for a dive into the latest advancements in AI-powered wearable and mixed reality (MR) technology. We will showcase new features and discuss the future potential of wearable and MR tech, including its translation to AR to drive accessible XR experiences.

Aesthetic Access for VR: Centering Disabled Artistry

Kiira Benz

Double Eye Studios | Founder & Creative Director

Alice Sheppard

Kinetic Light | Founder & Artistic Director

We will share technical innovations to produce ground-breaking aesthetic access from pre-production to virtual worldbuilding. “territory” is an equitably accessible VR experience which can serve as a case study for future productions. The first PCVR experience to use Meta Haptics Studio, “territory” created narrative haptics in parallel with music and sound design. The session will explore the artistry of audio description and artistic closed captions. Sharing our approach to disability aesthetics, the panel will offer general techniques for creators interested in making accessible work.

Accessibility Innovations for Extended Reality Drama and Documentary

Sacha Wares

Trial and Error Studio | Director

Sacha is the founder of Trial and Error Studio, through which she is developing a number of XR (extended reality) projects, including Inside: The Life and Work of Judith Scott (IDFA Forum Award 2020). She was associate director of the National Theatre’s Immersive Storytelling Studio and digital innovation associate at English Touring Theatre. Previously, she was associate director of the Royal Court Theatre (2007 – 2013) and an associate at the Young Vic Theatre (2010 – 2017).

Sacha Wares, a leader in the field of directing for extended reality, will discuss two recent projects in which the editorial themes and novel formats demanded XR accessibility innovations. Museum of Austerity is a mixed reality exhibition exploring links between the deaths of disabled people and changes to the UK benefit system. Audiences are offered a menu of access options, including in-headset captions, spatialised audio description and the option to remove sensitive content. Inside, by contrast, is a dialogue-free multisensory VR docu-drama about sculptor Judith Scott. This production is underpinned by Innovate funded R&D exploring the application of Universal Design principles to XR narrative. Audiences can select spatialised audio description while a range of multisensory inputs – temperature, wind, haptics – guide the audience’s attention, acting as alternatives to traditional text/audio attentional prompts. In addition to a Talk, we propose to share a Demo of Inside.

I4AD: Diverse Perspectives from BLV Users on Designing Immersive, Interactive, Intelligent, and Individualized Audio Description Framework for VR Musical Performances

Khang Dang

New Jersey Institute of Technology | PhD Candidate

As a Ph.D. candidate at New Jersey Institute of Technology, Khang Dang focuses on human–computer interaction, using mixed-methods research to inform data-driven, user-centered solutions. He enjoys developing accessible XR applications and helpful AI agents that intuitively make people’s daily lives a little easier and a touch more melodic.

In this talk, I will introduce the I4AD framework, built on four key components: Immersion, Interactivity, Intelligence, and Individualization. This framework rethinks audio descriptions (AD) for Virtual Reality (VR) musical performances by actively involving blind and low-vision (BLV) participants throughout the design process. Drawing on feedback and insights from BLV users and professionals in our prior work (ASSETS’24 Technical & Demo, SUI’23), I will demonstrate how I4AD integrates spatial audio techniques, adaptive interaction strategies, and the implementation of intelligent system responses, including an AI-driven conversational feature for real-time voice interactions. The framework also supports the personalization of AD delivery based on each user’s preference profile. This session will discuss both the challenges and exciting opportunities in creating inclusive VR musical experiences, demonstrating how incorporating diverse BLV perspectives can lead to richer outcomes.

Exploring Cognitive Accessibility in Mixed Reality for People with Dementia

Rupsha Mutsuddi

York University | PhD Student

Rupsha is a Doctoral Student at the School of Global Health at York University and a Graduate Scholar at the Dahdaleh Institute for Global Health Research, where her work focuses on Human-Centered Design to improve the quality of life for people living with dementia in global communities. Rupsha has worked for a diverse array of clients in the government, healthcare, non-profit, and clean beauty spaces, including the Detox Market, Habitat for Humanity Waterloo Region, and the Ontario Public Health Association.

Mixed Reality (MR) is a subset of technologies that allow digital elements like audio and images to be layered on the real world. MR holds promise in helping People Living with Dementia (PwD) engage in everyday activities by offering assistive modalities. MR should be designed to be cognitively accessible for PwD. Our research explores the outcomes of cognitively accessible systems for older adults living with early-stage dementia or mild cognitive impairment in MR. Based on these findings, we offer recommendations on how to design cognitively accessible MR applications for older adults living with early-stage dementia or mild cognitive impairment.

Immersive Environments for Resettlement

Milad Mozari

University of Utah | Assistant Professor

Krysti Nellermoe

International Rescue Committee | Senior Training Officer

In its seventh year, this project is an initiative between the University of Utah and the International Rescue Committee to bridge the digital divide and foster career opportunities in design and technology for refugee and immigrant communities. The project creates flexible and scalable pathways for digital literacy, exposure to emerging technologies, and community engagement through a unique combination of virtual public spaces, game-based learning, and a co-creation model. To date, the core team has co-created multiple immersive VR 360° videos with service providers in multiple cities and newcomer input, simulating real-life situations such as visiting a doctor’s office, navigating a school, and using public transportation. These videos will be accessible to newcomer refugee and immigrant communities via an immersive game, Juniper City, that will help newcomers navigate a simulated U.S.

Breakout Sessions

The Breakout Discussions are a chance for Symposium attendees to gather and share their insights on important issues of the day. Though they are led by moderators, these are intended as a way for everyone to contribute their expertise equally. The Breakout Discussions are unfortunately for in-person attendees only, though we encourage remote attendees to have discussions of their own on Slack.

The Breakout Discussions will take place 2:40-3:40pm ET on Thursday June 26th, spread throughout the Verizon Executive Education Center.

| Title | Moderators | Description | Location |

|---|---|---|---|

| Disability, Content Creation, and the Arts | Steven McCoy, Spoken Heroes; Kiira Benz, Double Eye Studios | This session will explore various topics, including identifying barriers faced by creators with disabilities in the XR industry, as well as successful initiatives, technologies, and design strategies that improve XR accessibility. | Gallery |

| Neurodiversity and XR | Nigel Newbutt, University of Florida; Logan Ashbaugh, CSU San Bernardino | This session will explore the often-overlooked intersection of neurodiversity and XR, inviting dialogue on the emerging opportunities, challenges, and ethical imperatives for creating immersive technologies that are genuinely inclusive of neurodivergent populations. | Lobby |

| XR/AI Policy & Standards | Larry Goldberg, Independent; Michael Bervell, TestParty; Peirce Clark, XR Association | This session will explore emerging discussions around recent policy and standards initiatives to promote inclusive and beneficial AI implementations as well as metaverse/extended reality (XR) technologies. | Classroom 225 |

| Accessible Game Design | Brian Smith, Columbia | This session will explore what is needed for meaningful game accessibility, developing shared principles that can guide research and industry. | Auditorium |

| XR in Healthcare | Judite Blanc, University of Miami Miller School of Medicine; Chelsea Twan, NYU Langone Health | This session will explore strategies for ensuring equitable access to XR technologies, including augmented and virtual reality, in healthcare, with a focus on bridging the digital divide and promoting inclusivity across diverse demographic groups. | Auditorium |

Sponsors

XR Access Symposia can’t happen without the support of our generous sponsors. Visit our sponsorship page to learn about the many benefits of sponsoring the Symposium.

Platinum Sponsors

Frequently Asked Questions

How do I get to the venue?

Public transportation by subway, ferry, or tram is recommended. See this transportation guide for details.

Will the main stage presentations be recorded?

Yes, they will be streamed live via Zoom and added to the XR Access YouTube channel after the conference.

Will the breakout discussions be recorded?

No, unfortunately our recording equipment is not suited to capturing multiple small groups. However, the takeaways will be included in the Symposium Report.

Why is there a fee to attend in person?

Unfortunately, events in the physical world are expensive; the Symposium will cost tens of thousands of dollars. However, if the expense is a hardship for you, please apply for a scholarship using the link in the description. Note that registering for Zoom and watching online is still free.