Authors: Ricky Gonzalez, Jazmin Collins, Shiri Azenkot, Cindy Bennet

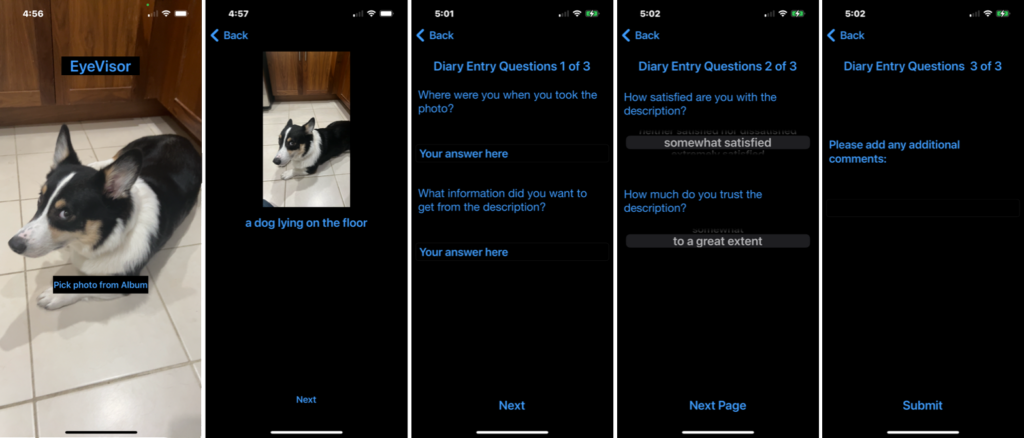

Cornell Tech PhD students Ricky Gonzalez and Jazmin Collins, XR Access co-founder Shiri Azenkot, and Google accessibility researcher Cindy Bennet are investigating the potential of AI to describe scenes to blind and low vision (BLV) people. To collect data about why, when, and how BLV people use AI to describe visuals to them, the team developed Eyevisor, an iOS application that simulated the use of SeeingAI.

The study’s results point to a variety of unique use cases for which BLV users would prefer to use AI rather than human assistance, such as:

- Detecting disgusting, dirty, and potentially dangerous things in the environment

- Solving disputes between blind and low vision friends that require visual information

- Avoiding awkward situations (e.g., touching a sleeping person)

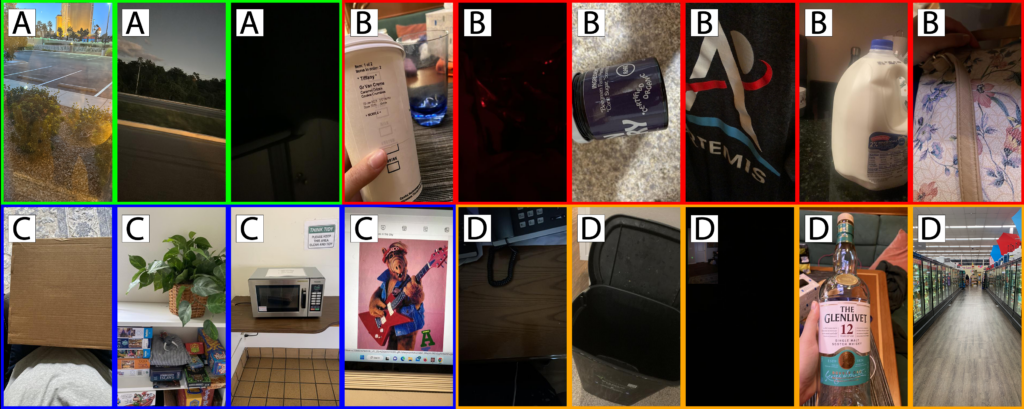

During the diary study, participants submitted entries with different goals. These examples represent four of the most common goals:

- (A) Getting a scene description

- (B) Identifying features of objects

- (C) Obtaining the identity of a subject in the scene

- (D) Learning about the application

The scene description application we used to collect data. Screenshots show the flow of using the application and submitting a diary entry. It includes five screens: the photo submission, the photo description, and three diary entry question screens. The interface was designed to group similar questions while minimizing the number of elements on each screen.

Scene description applications and the AI powering them are changing rapidly, especially with continuing advancements in generative and non-generative AI. As these technologies grow, it remains important to investigate how people use them, what their needs are, and what their goals are when using AI. Our work guides advancements in scene description and establishes a more useful baseline of visual interpretation for BLV users and their daily needs.

This work will be presented at the ACM CHI conference in Hawaii on May 11-16, 2024. Contact Ricardo Gonzalez at reg258 [at] cornell.edu for more information.